Analog or Digital Pull Tester: Choosing the Best Option for Your Needs

When choosing between an analog and a digital gauge for your pull tester, several factors come into play. A digital gauge generally offers higher accuracy and precision, making it the preferred choice for those who prioritize exact measurements. Analog gauges, by contrast, may appeal to users seeking a more cost-effective solution without sacrificing functionality.

Calibration also sets these two types apart. Digital gauges often offer simpler calibration procedures and include features that make data easier to read, which can be useful in rigorous testing environments. Analog gauges, meanwhile, typically require manual calibration.

Ultimately, the right selection depends on the specific needs of the user, including budget, measurement accuracy, and operational requirements. Understanding these aspects will help in making an informed decision tailored to the intended application.

Determining Your Needs

Identifying specific requirements is crucial in selecting between analog and digital gauge pull testers. Essential considerations include the nature of the tension or pressure being measured, as well as the environment in which the equipment will be used.

Assessing Tension and Load Requirements

The choice between analog and digital testers often hinges on the tension and load levels required for a task. Analog gauges typically excel in environments with moderate tension, offering a straightforward visual readout. Conversely, digital testers are more suited for precise measurements in high-tension applications.

Considerations include:

- Maximum Load: Assess the maximum load your application will impose.

- Measurement Accuracy: Digital testers usually provide higher accuracy (around ±0.5% of full scale, i.e. of the range) than analog gauges (around ±2% of full scale), which matters for critical measurements.

- Readability: Digital displays are often easier to read and reduce the risk of misreading.

Choosing the right type based on load characteristics can greatly enhance measurement efficiency.
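Because both accuracy figures above are stated as a percentage of the range (full scale), the absolute error band is fixed for a given gauge, and it becomes a larger share of small readings. The Python sketch below shows that arithmetic. The 1,000 lbf range and the example readings are assumptions, and the function names are hypothetical.

```python
# Sketch: turn an accuracy spec stated as % of full scale into an absolute
# error band, then express that band relative to an actual reading.
# The 0.5% and 2% figures are the typical values quoted above; the
# 1,000 lbf range is an assumed example. Function names are hypothetical.

def error_band(full_scale: float, accuracy_pct_fs: float) -> float:
    """Absolute +/- error for an accuracy stated as % of full scale."""
    return full_scale * accuracy_pct_fs / 100.0


def relative_error_pct(reading: float, full_scale: float, accuracy_pct_fs: float) -> float:
    """The same absolute error, expressed as a % of the actual reading."""
    if reading <= 0:
        raise ValueError("reading must be positive")
    return 100.0 * error_band(full_scale, accuracy_pct_fs) / reading


def within_capacity(max_load: float, full_scale: float) -> bool:
    """True if the expected maximum load does not exceed the gauge range."""
    return 0 < max_load <= full_scale


if __name__ == "__main__":
    capacity = 1000.0  # lbf, assumed example range
    for label, acc in (("digital", 0.5), ("analog", 2.0)):
        band = error_band(capacity, acc)
        for reading in (100.0, 800.0):
            rel = relative_error_pct(reading, capacity, acc)
            print(f"{label}: ±{band:g} lbf at {reading:g} lbf = ±{rel:g}% of reading")
```

With a 1,000 lbf range, the digital band works out to ±5 lbf and the analog band to ±20 lbf. At a 100 lbf reading those bands are 5% and 20% of the reading, which is why it helps to choose a range not much larger than the maximum load you expect.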

Considerations for Durability and Environment

Durability is a key factor in selecting a pull tester, especially in challenging environments. Conditions such as extreme temperatures, shocks, or drops can significantly reduce equipment longevity.

Key considerations include:

- Material: Select testers made from materials resistant to rust and degradation.

- Temperature Tolerance: Identify equipment rated for the temperature range of your application.

Understanding the operational environment will guide the decision towards a durable solution that performs reliably under the specific conditions.

Technical Specifications and Calibration

Calibration and measurement increments are critical for ensuring accurate readings in both analog and digital gauge pull testers. Understanding these aspects will help users select the right tool for their needs and ensure precision in their measurements.

Understanding Gauge Calibration

Gauge calibration involves adjusting and verifying the accuracy of a measurement instrument against a known standard. For pull testers, this ensures that the applied force is measured accurately, typically in units such as lbf (pounds-force) or kN (kilonewtons).

Calibration should be performed regularly to maintain accuracy. It usually requires dedicated equipment or a certified calibration service. Follow the manufacturer's recommended calibration interval to catch measurement drift; in most cases, annual calibration is recommended.
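The pass/fail logic behind a calibration check can be sketched in Python. This is a minimal illustration, not a calibration procedure: the reference loads, readings, tolerance and 365-day interval are hypothetical placeholders, and the names `CheckPoint`, `calibration_passes` and `next_due` are invented for this example. Actual calibration should follow the manufacturer's procedure or be carried out by a certified calibration service.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class CheckPoint:
    """One verification point (hypothetical structure for this sketch)."""
    reference: float  # load applied by the reference standard, lbf
    reading: float    # value shown by the pull tester, lbf


def calibration_passes(points: list[CheckPoint], full_scale: float,
                       tolerance_pct_fs: float) -> bool:
    """True if every reading is within +/- tolerance, stated as % of full scale."""
    limit = full_scale * tolerance_pct_fs / 100.0
    return all(abs(p.reading - p.reference) <= limit for p in points)


def next_due(last_calibration: date, interval_days: int = 365) -> date:
    """Next calibration date; 365 days stands in for a yearly interval.

    Replace interval_days with the manufacturer's recommendation.
    """
    return last_calibration + timedelta(days=interval_days)


if __name__ == "__main__":
    # Hypothetical check on a 1,000 lbf gauge with an assumed 0.5% FS tolerance.
    points = [CheckPoint(200, 201), CheckPoint(500, 503), CheckPoint(1000, 996)]
    print(calibration_passes(points, full_scale=1000, tolerance_pct_fs=0.5))
    print(next_due(date(2023, 1, 15)))
```

In this placeholder data every deviation (1, 3 and 4 lbf) is inside the ±5 lbf limit, so the check passes. A single point outside the limit would fail the whole gauge, which is the usual intent of a verification against several reference loads.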

The Role of Increments in Measurement Precision

Measurement increments, or resolution, play a significant role in the precision of readings. The smaller the increment, the finer the reading. Resolution is not the same as accuracy, however: a finer increment does not by itself make a gauge more accurate.

For instance, a gauge that reads in increments of 100 lbf provides finer gradations than one that reads in increments of 1,000 lbf. This is particularly important when working with sensitive materials that require exact force application.

When selecting a pull tester, always check the resolution. For the same capacity, for example 1,000 lb, a digital pull tester typically reads in increments of 1 lb, whereas an analog pull tester reads in increments of 10 lb.
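The effect of the display increment can be illustrated with a short Python sketch. It assumes, as a simplification, that a reading is rounded to the nearest increment; on a real analog dial the user estimates the needle position between graduations. The function names are hypothetical.

```python
# Sketch: how the display increment (resolution) limits what a gauge can show.
# Assumes ideal rounding to the nearest increment; names are hypothetical.

def displayed_value(true_force: float, increment: float) -> float:
    """Round a force to the nearest display increment."""
    return round(true_force / increment) * increment


def divisions(capacity: float, increment: float) -> int:
    """Number of distinct steps the gauge can show across its range."""
    return int(round(capacity / increment))


if __name__ == "__main__":
    true_force = 437.6  # lbf, assumed example
    print(displayed_value(true_force, 1))   # digital, 1 lb increments
    print(displayed_value(true_force, 10))  # analog, 10 lb increments
    print(divisions(1000, 1), divisions(1000, 10))
```

For the 1,000 lb example, a true force of 437.6 lbf shows as 438 lb with 1 lb increments but as 440 lb with 10 lb increments. The digital gauge resolves 1,000 steps across its range versus 100 for the analog dial, and the worst-case rounding error is half an increment (0.5 lb versus 5 lb).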

Comparing Analog and Digital Gauges

When evaluating analog and digital gauges, key differences emerge in functionality and cost. Understanding these aspects will assist in making an informed choice.

Key Differences in Functionality

Analog gauges use a needle and dial to display measurements. They give a visual representation of the load that allows quick assessments, but they carry a higher risk of misreading the needle position.

Digital gauges, on the other hand, offer numerical readings on an electronic display. One of their advantages is precision, as they minimize the potential for user interpretation errors.

Price and Cost-Benefit Analysis

Analog gauges are generally cheaper in terms of initial investment. Their simplicity in design means that production costs are lower, making them accessible for basic tasks.

Digital gauges tend to be more expensive, carrying costs associated with advanced technology and features. However, the investment could be justified through increased accuracy and functionalities that save time and improve productivity. When considering long-term use, the cost-benefit analysis may favor digital models for their reliability and added features despite the higher upfront price.
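A simple total-cost-of-ownership calculation is one way to run this cost-benefit analysis for your own situation. The sketch below is hypothetical: the function name, the cost components it includes (purchase price, annual calibration, operator time) and all example figures are placeholder assumptions, not real prices.

```python
# Sketch of a total-cost-of-ownership comparison. All names, cost
# components and figures are hypothetical placeholders.

def total_cost(purchase_price: float, annual_calibration_cost: float, years: int,
               tests_per_year: int, minutes_per_test: float,
               labour_rate_per_hour: float) -> float:
    """Purchase price + calibration + operator time over the ownership period.

    Add or remove terms (repairs, batteries, downtime) to match your situation.
    """
    calibration = annual_calibration_cost * years
    # Total operator minutes converted to hours, multiplied by the hourly rate.
    labour = tests_per_year * years * minutes_per_test * labour_rate_per_hour / 60.0
    return purchase_price + calibration + labour


if __name__ == "__main__":
    # Placeholder figures only: replace with your own quotes and workload.
    analog = total_cost(1000, 150, 5, 200, 3.0, 60)
    digital = total_cost(2000, 150, 5, 200, 1.5, 60)
    print(f"analog: {analog:.2f}  digital: {digital:.2f}")
```

With these placeholder inputs the digital option costs less over five years because of the assumed time saving per test. With far fewer tests per year, the cheaper analog gauge can come out ahead, so the inputs matter more than the formula.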

Usability and Readability

Considerations surrounding usability and readability are crucial when selecting an analog or digital gauge pull tester. The ease with which users can interpret measurements and the clarity of display can significantly affect the effectiveness of these devices.

Ease of Interpretation and Display Clarity

Interpretation of measurements directly affects usability. For analog gauges, clear markings and a well-designed dial can reduce parallax error, allowing for accurate readings. Users should look for gauges with contrasting colors, such as a bright needle against a dark background, which improves visibility.

Digital gauges often feature easy-to-read displays. Numeric readouts can eliminate ambiguity, while larger displays facilitate quick glances. Some models also provide graphical representations of measurements, aiding in rapid comprehension.

Considering the Impact of User Experience

User experience plays a pivotal role in the usability of testers. Devices that are cumbersome or challenging to read can lead to errors and frustration. Features such as ergonomic grips and intuitive button layouts enhance the overall experience.

Ease of operation is essential. For instance, digital gauges with an automatic zeroing function can save time and minimize errors. Clear instructions or visual aids supplied with the device further improve usability, shorten the learning curve, and increase user confidence. Users benefit from devices that prioritize clarity and accessibility in design.

Linked products

Pull-out-testing-equipment-for-Roofing-industry

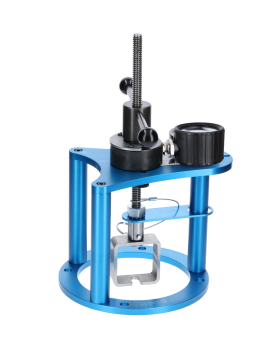

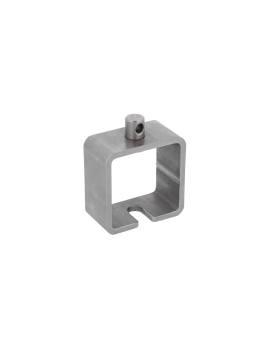

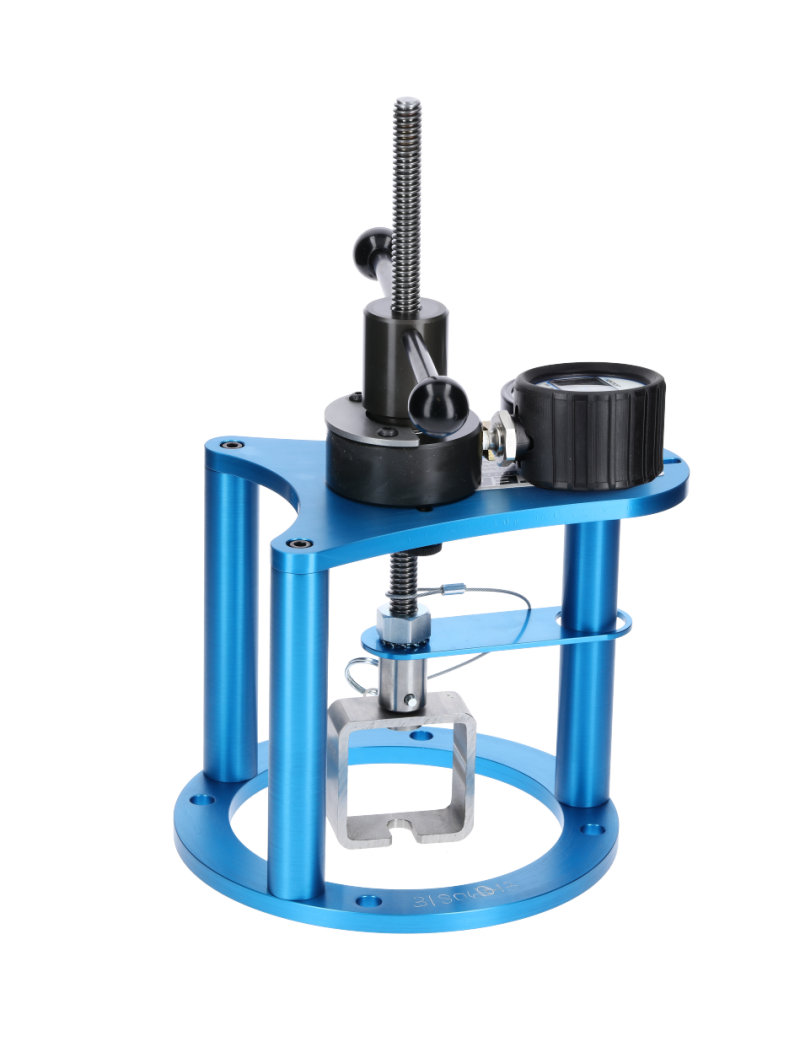

EXTRACTOR D : Digital Pull Tester for screws, anchors, bolts - Full set of accessories for testing fasteners

- Display peak force ...

EXTRACTOR A : Analog Pull Tester for roofing screws and fasteners - Full set of accessories for testing fasteners

- Displays peak...

ADHOR D : Digital adhesion tester with disposable discs - Measure pull-out strength between two layers after a repair or new work ...